I participated in my first European hackathon, TechFestMunich, in September 2017. It was unlike any of the hackathons I had attended back in India, and I did not have my trusty teammate Arjun with me. I also finally got to work on Augmented Reality.

TechFestMunich is hosted by UnternehmerTUM, the center for innovation and business creation at the Technical University of Munich, Germany. I think it is one of Europe’s hottest hackathons, second only to HackZurich. This year they chose only 300 participants, most of whom were working professionals. Since the venue was a business incubation center, its labs were loaded with tech!

Prior to the event, the organizers set up a Slack channel to discuss the projects and find potential teammates. I didn’t really find any of the offered projects interesting. Meanwhile, some of the other contestants were pitching their own ideas on Slack, hoping people would join them. One of them was André Kovac, who was pitching some really intriguing Augmented Reality (AR) projects for visualizing equations. I’ve always wanted to work on AR, but I never really got the opportunity, or maybe I just never wanted to let go of Android. Since my teammate wasn’t around either, I figured I’d take a shot in the dark with this one, so I pinged André and we decided to team up.

DAY 1 – “It’s going to be really difficult to build this in 2 days!”

I met up with André right after breakfast and we started discussing our game plan. This was when I found out that he hadn’t actually worked with AR either! We needed to know what AR could and could not do before even thinking about the application, so we attended Microsoft’s workshop on Mixed Reality using the HoloLens to understand what it was all about.

There’s Virtual Reality and Augmented Reality, so what’s Mixed Reality?

I’ll try to put this as best I can. Virtual Reality (VR) is when you don’t see the physical world at all; you are completely inside a virtual environment. The experiences you get with Google Cardboard and the Oculus Rift are examples of VR. Augmented Reality is when you see virtual objects on top of the physical world. Mixed Reality (MR) is also Augmented Reality, but it is much more life-like: virtual objects placed in MR are more accurately anchored within the physical world and interact with it more, a popular example being Pokémon Go. AR and MR essentially convey the same idea. The term ‘Mixed Reality’ was popularized by Microsoft, apparently in an effort to make things less confusing for the public.

The HoloLens is Microsoft’s vision for Augmented Reality; they call it the world’s first self-contained holographic computer. It can overlay nearly opaque graphics on top of your vision. It looks pretty rad, but it’s not the most comfortable thing to wear. The team from Microsoft demonstrated the HoloLens using a simple application built in Unity: you place an object in the physical world, walk away from it, and come back to find that it’s still there.

THE IDEA

The idea was to create an AR application to teach sign language. Back in 2016, my mentor Prof. Geetha suggested a project for my Bachelor’s thesis: an Android application that could teach sign language using a 3D avatar by converting text into sign language gestures. I never took up the project due to limited resources and time constraints. However, with the virtually unlimited resources available at the hackathon, it seemed like the best place to implement this idea.

The added advantage of AR is that users can view the gestures from their own perspective. When learning sign language, an instructor normally shows us the gestures while facing us, but because the instructor’s left becomes our right and vice versa, compound gestures involving both hands are hard to follow. With AR, we can overlay the instructor’s hands on our normal vision without inverting the perspective, so we can see exactly how our own hands should move right in front of us. You can see below how I’m easily able to follow the virtual hand. (Note that the virtual hand is not following my gesture!)

I discussed this idea with André and he liked it too. Someone suggested that we take it to Rafael, the coordinator of Roboy, to see if it was feasible. After hearing the idea, he said he liked it very much. Then he asked us a few questions.

-Which one of you is the expert in sign language?

-We looked at each other and told him we didn’t know sign language.

-Okay, so which one of you has worked on AR before?

-Again, we looked at each other and told him we hadn’t worked on AR before.

-Then it’s going to be really difficult to build this in 2 days!

He then told us about a Unity workshop that afternoon, run by two game developers who had a motion capture suit we could use to record the sign language gestures. That was just what we needed. The first thing we did was reserve our workspace: the crew had already set up 2 workstations for the HoloLens with all the necessary tools pre-installed. We laid down our jackets, backpacks and coffee cups just to make it clear the spot was taken! We then went to the hardware library and got our own HoloLens, and someone happened to take a picture of it.

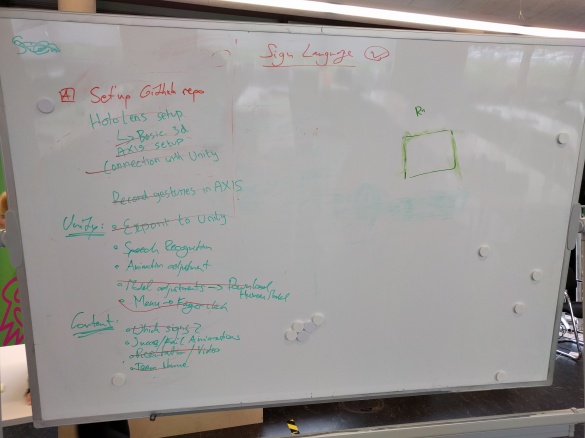

Next up was the ideation phase for the AR/VR projects. They had us discuss our ideas with others in pairs and in groups. After a couple of rounds, we were asked to jot down our ideas on sticky notes and put them on a board. We then had to pitch our idea in front of everyone in under 30 seconds. This was when Franz and Saqib joined us.

The Unity workshop was really helpful. They showed us how they animate 3D avatars in their games using a motion capture suit. After the workshop we pitched the idea to them, and they were quite impressed. They offered to help us record the sign language gestures. They had not brought the complete suit to the workshop, so it could not yet pick up finger movements, but they promised to bring it the next day.

Then we scribbled a roadmap and split the tasks. André and Saqib were going to set up the HoloLens and deploy a sample application. Franz was going to learn some sign language gestures. Meanwhile, I went home to learn Unity.

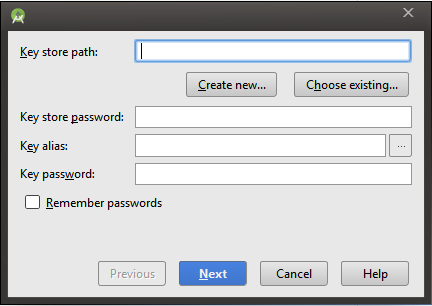

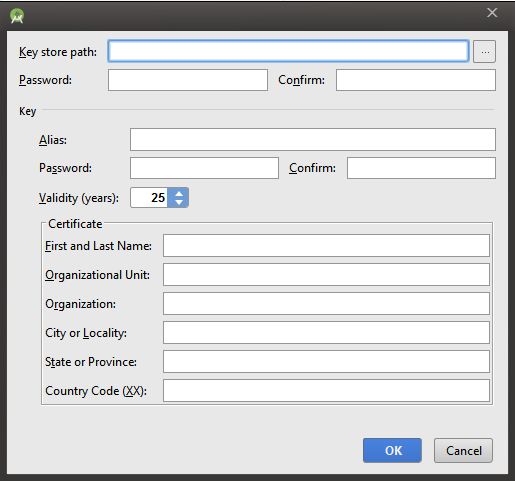

DAY 2 – Sleeping at 5 in the morning

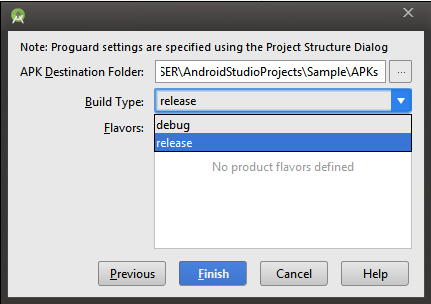

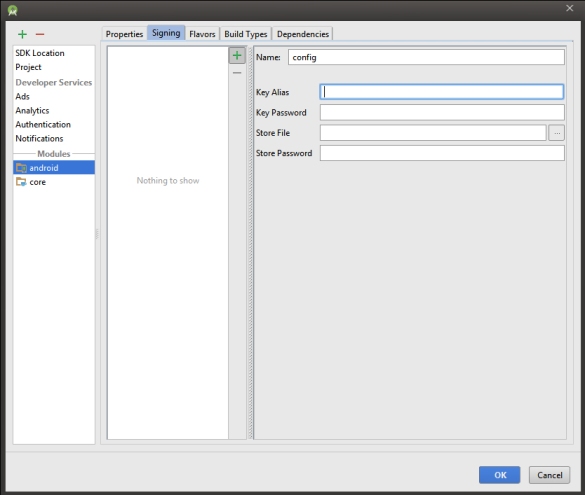

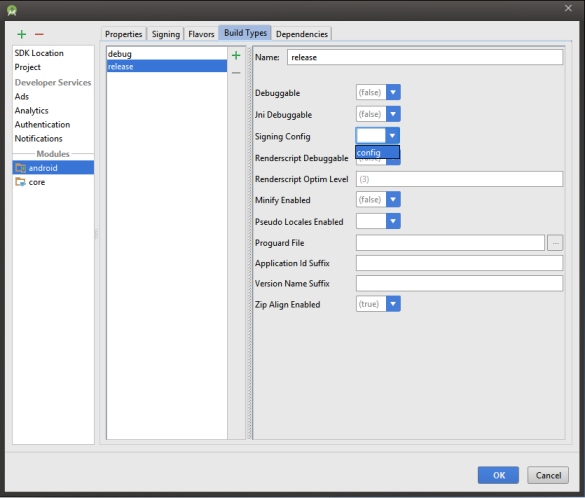

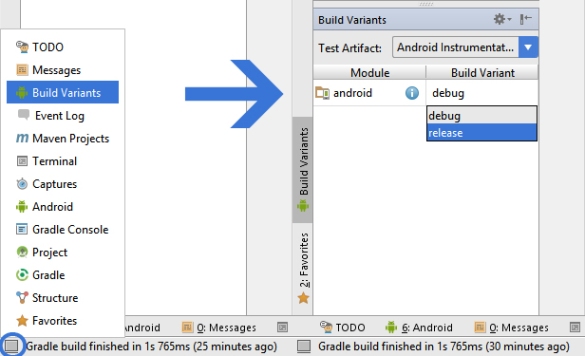

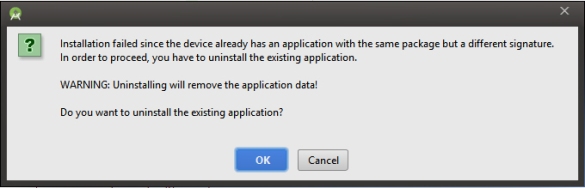

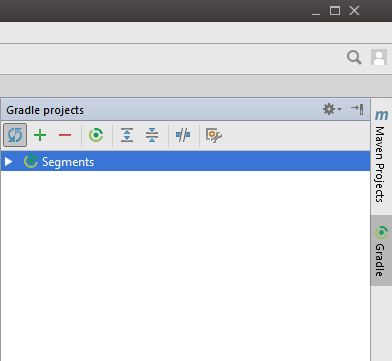

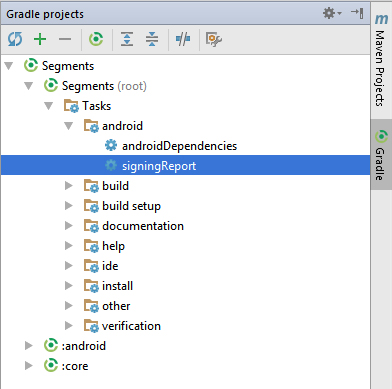

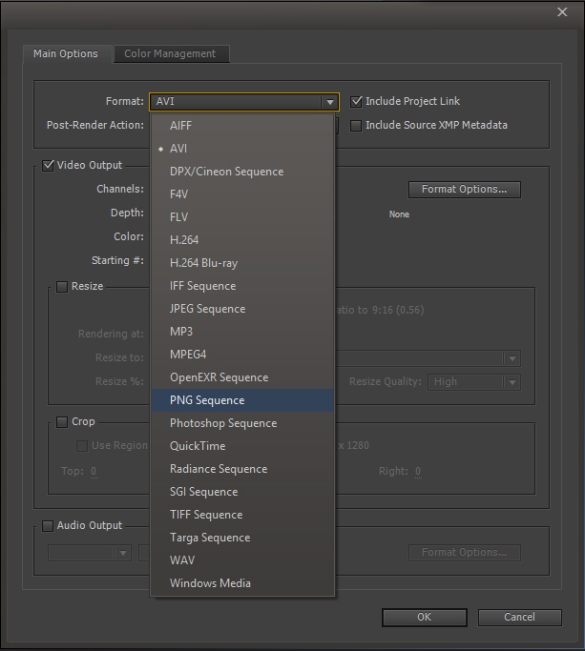

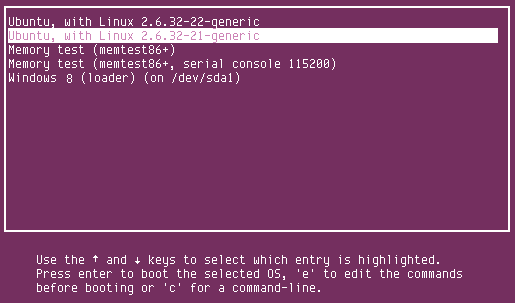

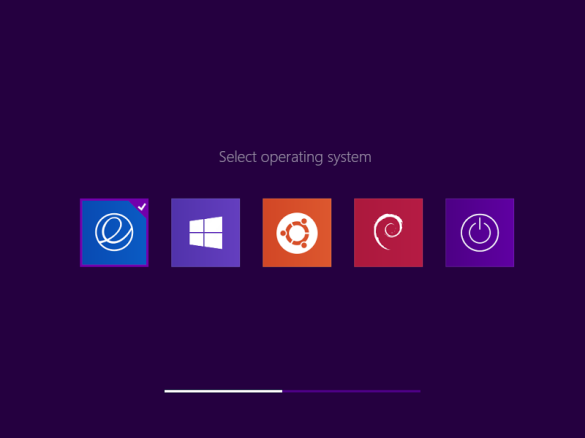

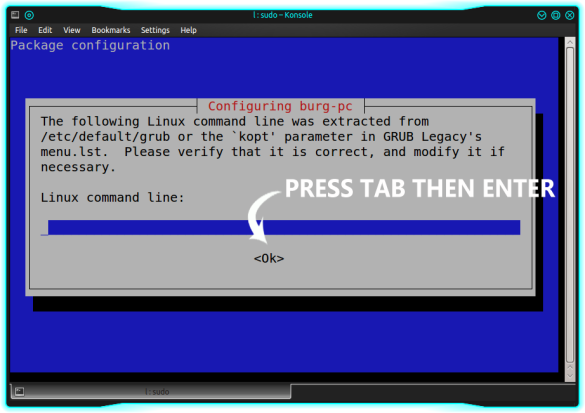

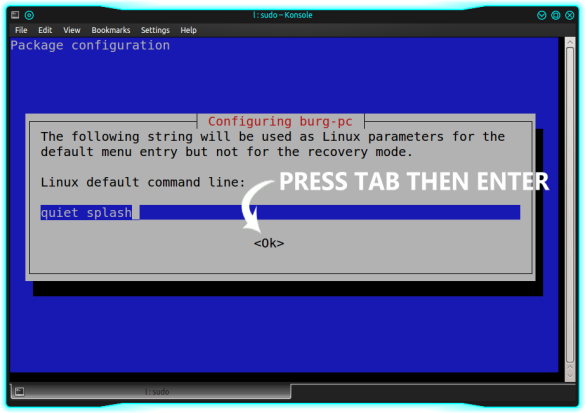

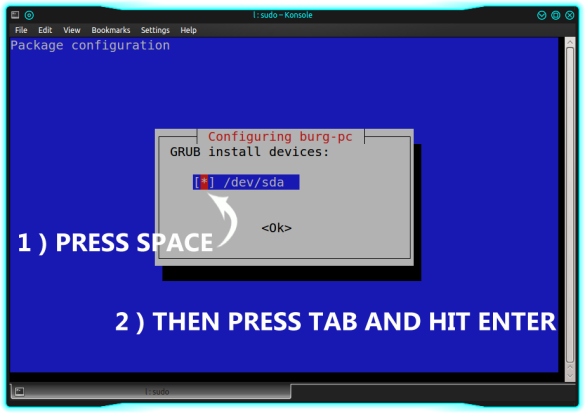

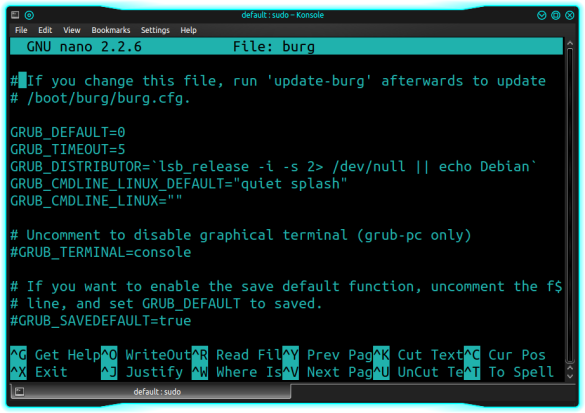

I knew this day was going to be the game changer, so I came prepared with an extra pair of clothes, a toothbrush and some fruit. André and Saqib had already deployed a sample application on the HoloLens that displayed a 3D cube. Then Saqib showed me the ritual that had to be performed every time we deployed an application from Unity to the HoloLens. It involved configuring the build with some special parameters, generating the build, opening that build in Visual Studio and then deploying it to the HoloLens. I did not ask a single question!

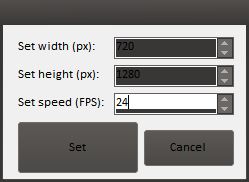

The game developers showed up, and it was time for Franz to suit up. We were using the Perception Neuron motion capture suit, which is widely used in animated movies and games. The suit pairs with a Bluetooth hotspot that connects to the computer via USB and feeds their software, AXIS Neuron.

First, the suit had to be calibrated by standing upright, sitting down, bending the knees and so on. This was when we noticed a weird issue with the avatar’s movements. Since we needed to capture only the hand movements, we had placed sensors only on the upper body, but this made the avatar’s movements look unnatural.

We then placed sensors on his legs as well, and after a couple of calibrations we were ready to start recording gestures.

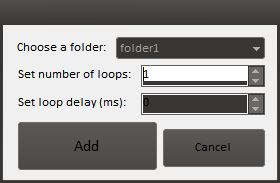

This was really the coolest and most fun part of the whole project. We recorded the gestures for a few words and sentences, as well as an idle animation for when the avatar was waiting.

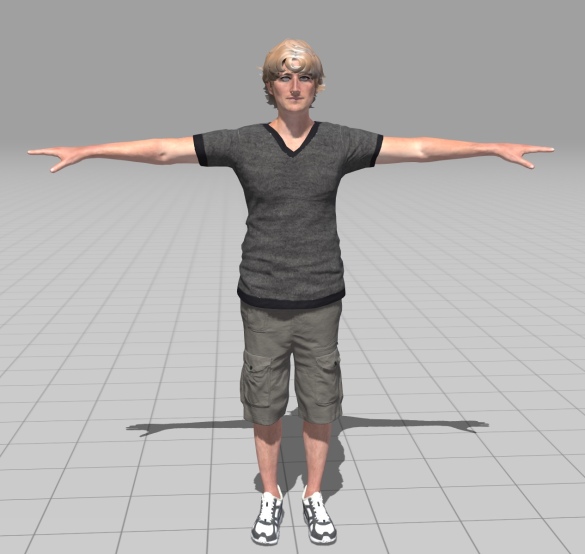

Then we had to apply these animations to a 3D human avatar in Unity. Fortunately, AXIS Neuron could export the animations in FBX format, which Unity accepts. We started looking for 3D human avatars, but a Google search did not help. Most of what we found was either not rigged, expensive, or plain naked, and we were not planning on showing a naked man on stage! (A rigged model is one whose joints, such as the elbows and knees, are defined. These joints have to match the joints defined in the recorded animation; only then can the animation be applied. The standard human rig is called the humanoid rig.) Unable to find any rigged human avatar, I approached one of the game developers, and he pointed me to the perfect website with free rigged 3D human avatars. I chose this dude because he was the only neutral-looking character of the lot.

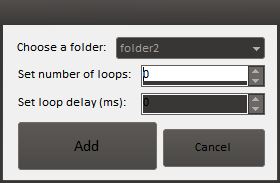
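To make the rigging requirement a little more concrete, here is a deliberately simplified Python sketch, not how Unity or AXIS Neuron actually work: it treats a rig and a recorded animation as nothing more than sets of joint names and checks whether every animated joint exists on the rig. The joint names and the `missing_joints`/`can_apply` helpers are hypothetical; real retargeting, such as Unity’s Humanoid avatar mapping, also has to map differently named bones and cope with different body proportions.

```python
from typing import Dict, List, Set

# Hypothetical recorded animation: joint name -> rotation keyframes (degrees).
ANIMATION_TRACKS: Dict[str, List[float]] = {
    "LeftShoulder": [0.0, 10.0, 20.0],
    "LeftElbow": [0.0, 45.0, 90.0],
    "RightElbow": [0.0, 30.0, 60.0],
}


def missing_joints(rig_joints: Set[str], tracks: Dict[str, List[float]]) -> Set[str]:
    """Return the animated joints that the rig does not define."""
    return set(tracks) - rig_joints


def can_apply(rig_joints: Set[str], tracks: Dict[str, List[float]]) -> bool:
    """An animation can only drive a rig that defines every joint it animates."""
    return not missing_joints(rig_joints, tracks)


# A rigged model defines its joints; an unrigged mesh defines none.
rigged = {"Hips", "Spine", "LeftShoulder", "LeftElbow", "RightShoulder", "RightElbow"}
unrigged: Set[str] = set()

print(can_apply(rigged, ANIMATION_TRACKS))                 # True
print(sorted(missing_joints(unrigged, ANIMATION_TRACKS)))  # ['LeftElbow', 'LeftShoulder', 'RightElbow']
```

Extra joints on the rig (like `Hips` here) are harmless; it is only the joints the animation needs but the model lacks that break things.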

Now that we had everything, it was my job to put it all together. Franz started working on the presentation and Saqib stayed to help me. The proposed flow was as follows: the avatar would stand in front of the user and show the gesture for a word or sentence. Then, when the user clicked a “TRY” button, the camera would shift to the avatar’s perspective and the animation would be replayed. I put together a simple UI and started by writing a state machine, as I usually do in my games. It triggered the animations on the avatar one by one with each button click.

When we deployed the application to the HoloLens, we could not click the button using the system-wide click gesture (the air tap). We later found out that a package had to be added manually, and after consulting a couple of forums we got it working.

Once the avatar could show the gestures, the next step was to replay them from the avatar’s perspective. This meant moving the camera from its initial position to the avatar’s face. André pointed out that the camera should animate its way to the face rather than jumping there abruptly, so that people could understand what was happening. After about an hour, we had achieved what we were hoping for.
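The difference between jumping to the avatar’s face and animating there comes down to interpolating the camera position over time. Below is a minimal Python sketch under stated assumptions: positions are plain 3D points, the start and end coordinates are made up, and the smoothstep easing curve is an illustrative choice rather than what we actually used that night. In Unity, the same idea would typically be written with `Vector3.Lerp` inside `Update` or a coroutine; this is not our project code.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Vec3:
    x: float
    y: float
    z: float


def lerp(a: Vec3, b: Vec3, t: float) -> Vec3:
    """Linear interpolation from a (t=0) to b (t=1)."""
    return Vec3(a.x + (b.x - a.x) * t, a.y + (b.y - a.y) * t, a.z + (b.z - a.z) * t)


def smoothstep(t: float) -> float:
    """Ease-in/ease-out curve on [0, 1]: slow at both ends, fastest in the middle."""
    t = max(0.0, min(1.0, t))
    return t * t * (3.0 - 2.0 * t)


def camera_path(start: Vec3, end: Vec3, duration: float, fps: int = 60) -> List[Vec3]:
    """Camera position for every frame of a move lasting `duration` seconds."""
    frames = max(1, round(duration * fps))
    return [lerp(start, end, smoothstep(i / frames)) for i in range(frames + 1)]


# Hypothetical coordinates (metres): the user's viewpoint and the avatar's eyes.
user_view = Vec3(0.0, 1.6, -2.0)
avatar_eyes = Vec3(0.0, 1.7, 0.0)

path = camera_path(user_view, avatar_eyes, duration=1.5)
print(len(path))  # 91: one position per frame at 60 fps, plus the starting frame
```

Easing matters as much as the interpolation itself: a constant-speed move starts and stops with a visible jolt, while smoothstep makes the first and last steps tiny, which is what lets viewers follow where the camera is going.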

Then we had to add the camera animation and its reverse between each pair of states, which at one point got too confusing. I realized I was over-complicating things with too many redundant states, so I wrote everything down on a piece of paper and had Saqib follow the states along with the application. At close to 5 am we were done! Here’s the working demo.

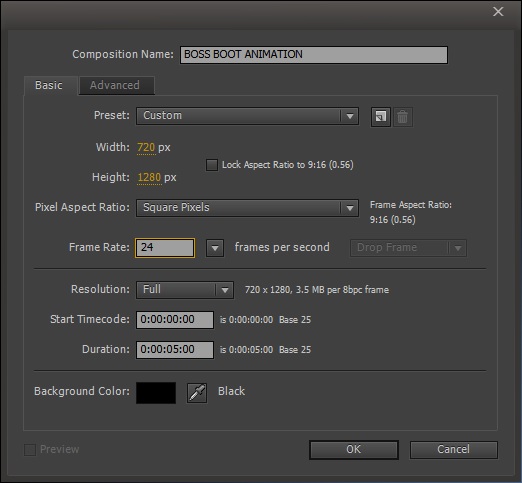
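The flow described above (show the gesture, animate the camera in, replay, animate the camera back, move on) fits in a small transition table. This is a hypothetical Python reconstruction for illustration, not the state machine from our Unity project: the state and event names and the `GestureTutor` class are invented here, and the real app triggered Unity animations rather than just updating a variable.

```python
from enum import Enum, auto
from typing import Dict, List, Tuple


class State(Enum):
    SHOWING = auto()           # avatar performs the gesture while facing the user
    CAMERA_TO_AVATAR = auto()  # camera animates toward the avatar's face
    TRYING = auto()            # gesture replays from the avatar's perspective
    CAMERA_BACK = auto()       # reverse camera animation to the starting view


class Event(Enum):
    TRY_CLICKED = auto()
    CAMERA_ARRIVED = auto()
    NEXT_CLICKED = auto()


# Every legal transition lives in one table, so no state is duplicated.
TRANSITIONS: Dict[Tuple[State, Event], State] = {
    (State.SHOWING, Event.TRY_CLICKED): State.CAMERA_TO_AVATAR,
    (State.CAMERA_TO_AVATAR, Event.CAMERA_ARRIVED): State.TRYING,
    (State.TRYING, Event.NEXT_CLICKED): State.CAMERA_BACK,
    (State.CAMERA_BACK, Event.CAMERA_ARRIVED): State.SHOWING,
}


class GestureTutor:
    def __init__(self, gestures: List[str]) -> None:
        self.gestures = list(gestures)
        self.index = 0
        self.state = State.SHOWING

    @property
    def current_gesture(self) -> str:
        return self.gestures[self.index]

    def handle(self, event: Event) -> State:
        """Apply an event; events that are not valid in the current state are ignored."""
        next_state = TRANSITIONS.get((self.state, event))
        if next_state is None:
            return self.state
        # Returning to the front view moves on to the next gesture (wrapping around).
        if self.state is State.CAMERA_BACK:
            self.index = (self.index + 1) % len(self.gestures)
        self.state = next_state
        return self.state


# Hypothetical gesture clip names.
tutor = GestureTutor(["hello", "thank you"])
for event in (Event.TRY_CLICKED, Event.CAMERA_ARRIVED, Event.NEXT_CLICKED, Event.CAMERA_ARRIVED):
    tutor.handle(event)
print(tutor.state.name, tutor.current_gesture)  # SHOWING thank you
```

Keeping every transition in one table is the same trick as writing the states on paper: each state appears exactly once, and stray clicks (for example, TRY while the camera is still moving) are simply ignored instead of spawning extra states.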

DAY 3 – THE PITCH

Franz prepared a fantastic presentation for the pitch, and he came up with the name LARS for the 3D avatar, short for Augmented Reality Sign Language teacher (yes, we know the letters don’t match). We then discussed our presentation strategy: Franz and Saqib would start by selling the idea, André would explain how it works, and I would present the live demo wearing the HoloLens on stage. Microsoft provides a web-based portal to stream the HoloLens display in real time over Wi-Fi, and we were going to use it to show the live demo.

We applied for two categories, so we got to make two pitches. The first category was for all kinds of innovative projects, and that pitch was perfect; the audience was totally sold! They were completely immersed in the live demo, and we got a lot of cheers and applause.

Then came the second pitch, for the VR/AR category, which was the one that mattered. Everything went according to plan, and then it was time for the live demo. LARS showed the first gesture and it was my turn to try it. I clicked the “TRY” button, the camera shifted to LARS’s perspective and his hands were showing the gesture perfectly, but I noticed there was no response from the crowd. When I looked back at the screen, his hands were not appearing in the stream, even though I could see them on the HoloLens. I switched to the next gesture, and again his hands did not appear!

That was such a bummer, and it ruined the whole presentation. We later discovered that the issue lay with the stream: it was buggy on a poor network and sometimes failed to render all the elements.

We didn’t make it to the finals after that disaster, but many people who saw our first pitch and witnessed our all-night coding session talked to us about the project and told us they had hoped we would win. That was really the most rewarding moment! I was amazed at how far we got without any prior experience in AR. We couldn’t have done it without the help of so many people, especially Rafael and the two game developers, whose names I unfortunately cannot recall. It was really interesting to work on AR, and I can’t wait to get my hands on the HoloLens again!

Saqib, André, Franz and me